But why? We can now decompose the predictions into the bias term (which is just the trainset mean) and individual feature contributions, so we see which features contributed to the difference and by how much. Predictions that the random forest model made for the two data points are quite different. Print "Instance 1 prediction:", rf.predict(instances) Print "Instance 0 prediction:", rf.predict(instances) Lets pick two arbitrary data points that yield different price estimates from the model. Let’s take a sample dataset, train a random forest model, predict some values on the test set and then decompose the predictions.įrom treeinterpreter import treeinterpreter as tiįrom ee import DecisionTreeRegressorįrom sklearn.ensemble import RandomForestRegressor You can check how to install it at Decomposing random forest predictions with treeinterpreter Note: this requires scikit-learn 0.17, which is still in development. Without further ado, the code is available at github, and also via pip install treeinterpreter Combining these, it is possible to extract the prediction paths for each individual prediction and decompose the predictions via inspecting the paths.

Fortunately, since 0.17.dev, scikit-learn has two additions in the API that make this relatively straightforward: obtaining leaf node_ids for predictions, and storing all intermediate values in all nodes in decision trees, not only leaf nodes. The implementation for sklearn required a hacky patch for exposing the paths. Unfortunately, most random forest libraries (including scikit-learn) don’t expose tree paths of predictions. I’ve a had quite a few requests for code to do this.

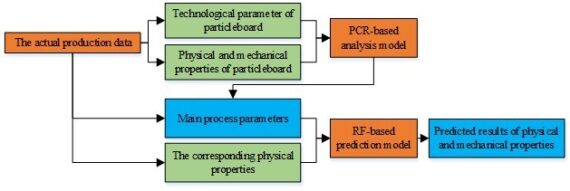

\(prediction = bias + feature_1 contribution + … + feature_n contribution\). In one of my previous posts I discussed how random forests can be turned into a “white box”, such that each prediction is decomposed into a sum of contributions from each feature i.e.
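To make the decomposition concrete, here is a minimal sketch on a single hand-built decision tree. It does not use scikit-learn or treeinterpreter: the `Node` class, the `decompose` function, the feature names `rooms` and `lstat`, and all node values are hypothetical and chosen only for illustration. The idea is to walk the prediction path from the root to a leaf and credit each change in node value to the feature that was split on.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Node:
    value: float                    # mean training target of the samples reaching this node
    feature: Optional[str] = None   # feature used for the split (None for a leaf)
    threshold: float = 0.0
    left: Optional["Node"] = None   # branch taken when x[feature] <= threshold
    right: Optional["Node"] = None  # branch taken otherwise


def decompose(root: Node, x: Dict[str, float]) -> Tuple[float, float, Dict[str, float]]:
    """Return (prediction, bias, contributions) for one sample x."""
    bias = root.value  # value at the root, i.e. the training set mean
    contributions: Dict[str, float] = {}
    node = root
    while node.feature is not None:
        child = node.left if x[node.feature] <= node.threshold else node.right
        # The change in value caused by this split is credited to the split feature.
        delta = child.value - node.value
        contributions[node.feature] = contributions.get(node.feature, 0.0) + delta
        node = child
    return node.value, bias, contributions


# Toy tree with made-up values (assumption, not taken from any real dataset).
tree = Node(
    value=22.0, feature="rooms", threshold=6.5,
    left=Node(
        value=19.0, feature="lstat", threshold=14.0,
        left=Node(value=23.0),
        right=Node(value=15.0),
    ),
    right=Node(value=37.0),
)

sample = {"rooms": 6.0, "lstat": 20.0}
prediction, bias, contributions = decompose(tree, sample)

print("prediction:", prediction)
print("bias:", bias)
print("contributions:", contributions)
print("bias + contributions:", bias + sum(contributions.values()))
```

For a regression forest, the prediction is the average over its trees, so the same decomposition carries over by averaging the per-tree bias terms and the per-tree contributions.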
